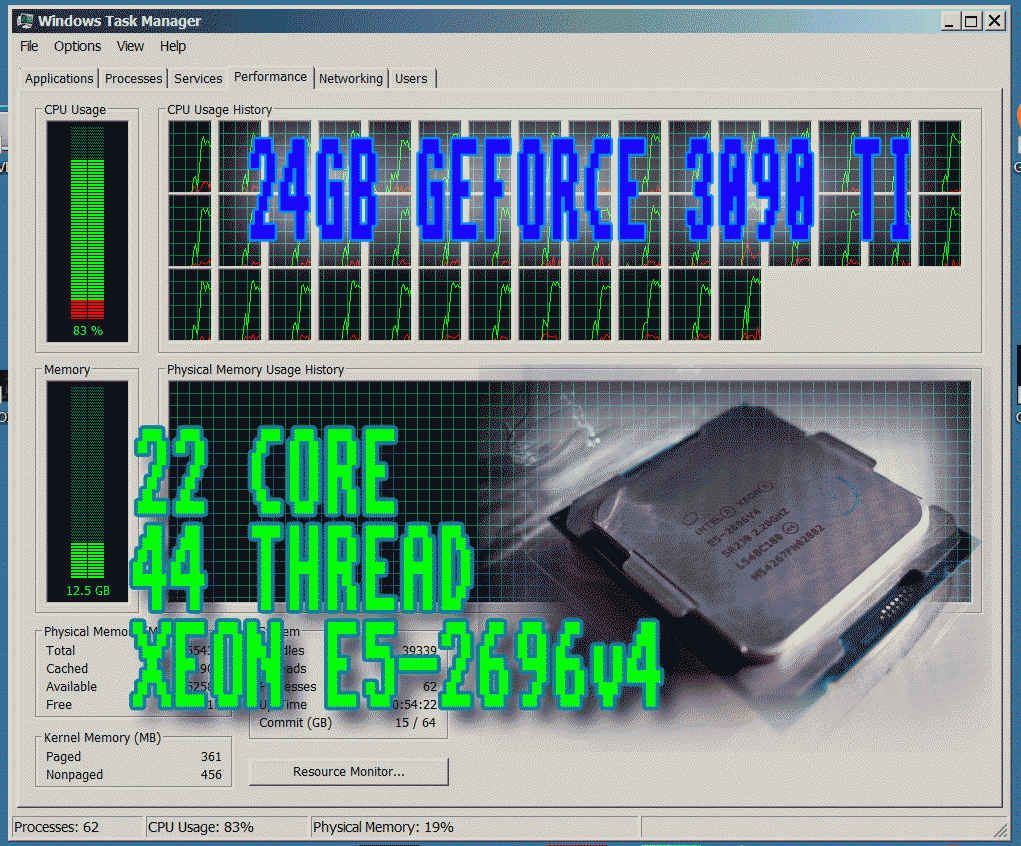

24GB GeForce 3090Ti on Xeon E5-2696v4 ▀ How Cool Is That?

¶ PREFACE NOTE

You're obviously thinking something like:

Man, this is universally stupid, because the Xeon E5 platform is built around PCIe 3.0 [15.75 GB/s on an x16 link] and the 3090Ti is a PCIe 4.0 [31.5 GB/s on an x16 link] card.

But I need to check this out no matter the cost.

Why? Coz I'm a weirdo, remember?
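For reference, the two bandwidth figures in the preface can be reproduced with a bit of arithmetic. This is only a sketch of the theoretical one-direction peak for an x16 link, using the published PCIe transfer rates [8 GT/s for 3.0, 16 GT/s for 4.0] and 128b/130b encoding. The function name `pcie_bandwidth_gbs` is made up for this example, and real transfers always land below these numbers.

```python
# Theoretical one-direction bandwidth of a PCIe link, in GB/s.
# PCIe 3.0 and 4.0 both use 128b/130b line encoding, so 128 of every
# 130 transferred bits carry payload.
def pcie_bandwidth_gbs(transfer_rate_gt: float, lanes: int = 16) -> float:
    encoding_efficiency = 128 / 130
    # GT/s per lane -> usable Gbit/s per lane -> GB/s across all lanes
    return transfer_rate_gt * encoding_efficiency * lanes / 8


pcie3_x16 = pcie_bandwidth_gbs(8.0)   # Xeon E5 / X99 platform
pcie4_x16 = pcie_bandwidth_gbs(16.0)  # what the 3090Ti is designed for

print(f"PCIe 3.0 x16: {pcie3_x16:.2f} GB/s")
print(f"PCIe 4.0 x16: {pcie4_x16:.2f} GB/s")
```

So on paper the card gets exactly half of the link bandwidth it was built for.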

█ COMPUTER CONFIGURATIONS

[1] ► Intel Xeon E5-2696v4 / ASRock X99 Extreme 4 / 64GB DDR4 2400MHz / GeForce 3090Ti 24GB Stock

[2] ► Ryzen 9 5950X PBO OC / Gigabyte Aorus Ultra X570 / 64GB DDR4 3600@3800MHz / Radeon 6900XT 16GB OC

PSU: Seasonic 1300W Gold [in both cases]

GFX SETTINGS:

2560x1600, 32-bit color [where possible], ULTRA+ settings, anti-aliasing turned OFF

SOFTWARE:

Operating system: Windows 7 SP2+ ESU. All software was patched to the latest versions.

▒ 3DMARK 1999 MAX

| TEST | 1 | 2 |
|---|---|---|
| 3D | 6163 | 34 |
| CPU | 110714 | 904 |

Modern platforms like X570 can't be measured correctly due to the program's age and its limitations. The X570 chipset is a no-go for 3DMark 1999.

▒ 3DMARK 2000

| TEST | 1 | 2 |
|---|---|---|
| SCORE | 56622 | 44234 |

[2] ► Threadripper 1950X / ASRock Taichi X399M / 64GB DDR4 2667@2800MHz / Radeon 6900XT 16GB [stand-in for this test only]

For some reason the modern Ryzen was not able to run this test, and the 3090Ti could only output 1920x1200 resolution.

As you can observe, old software loves the Intel ecosystem.

▒ 3DMARK 2001 SE

| TEST | 1 | 2 |
|---|---|---|
| SCORE | 57539 | 68269 |

3DMark 2001 SE prefers high-clocked Ryzen.

▒ 3DMARK 2003

| TEST | 1 | 2 |
|---|---|---|
| SCORE | 123455 | 100692 |

Adequate measurement on all platforms. It looks like 3DMark 2003 is the oldest benchmark that correctly handles not only classic systems but modern ones too.

▒ 3DMARK 2005

| TEST | 1 | 2 |
|---|---|---|
| SCORE | 31648 | 53898 |

The single-thread inefficiency of the older Xeon is starting to show.

▒ 3DMARK 2006

| TEST | 1 | 2 |
|---|---|---|
| 3D | 28028 | 48450 |
| SM2 | 9201 | 15926 |
| SM3 | 13886 | 24966 |
| CPU | 9638 | 14960 |

Ryzen supremacy here!

▒ 3DMARK VANTAGE [2007]

| TEST | 1 | 2 |
|---|---|---|
| GPU | 51722 | 68176 |
| CPU | 122057 | 78292 |

Xeon beats Ryzen on the CPU score? I'm treating this result as a glitch.

▒ 3DMARK 2011

| TEST | 1 | 2 |
|---|---|---|
| GS | 17113 | 15727 |
| PS | 17516 | 26659 |
| CS | 14359 | 19466 |
| GT1 [fps] | 87 | 81 |
| GT2 [fps] | 81 | 78 |
| GT3 [fps] | 102 | 86 |
| GT4 [fps] | 50 | 46 |
| PT [fps] | 55 | 84 |
| CT [fps] | 67 | 91 |

In some tests the 3090Ti surpasses the 6900XT on the modern Ryzen system, despite the GPU being limited by the older Xeon platform. The 384-bit bus and 1 TB/s of memory bandwidth kick ass!
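The 1 TB/s figure follows directly from the bus width. A quick sketch, assuming the 21 Gbps per-pin GDDR6X data rate from NVIDIA's published 3090Ti spec [that number is not stated anywhere in this post], with a made-up helper name:

```python
# Peak VRAM bandwidth = bus width [bits] x per-pin data rate [Gbit/s] / 8.
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    return bus_width_bits * data_rate_gbps / 8


# Assumption: 21 Gbps GDDR6X, taken from the public spec sheet.
rtx_3090ti_bw = memory_bandwidth_gbs(384, 21.0)
print(f"3090Ti peak memory bandwidth: {rtx_3090ti_bw:.0f} GB/s")
```

That works out to 1008 GB/s, which is where the "1 TB/s" comes from. It is a theoretical ceiling, not something a benchmark will actually hit.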

▒ 3DMARK 2021

| TEST | 1 | 2 |
|---|---|---|
| Fire Strike Ultra | 20616/22770/9470 | 24944/41386/12406 |
| Sky Diver | 98656/31849/33709 | 96209/48837/53051 |
| Cloud Gate | 42905/11872 | 45127/23499 |
| Ice Storm Extreme | 286819/48329 | 449545/89352 |
| DX11 Single Thread | 2652940 | 1288261 |
| DX11 Multi Thread | 3480798 | 2049429 |
| Vulkan | 32512467 | 34316979 |

The Xeon old-timer refuses to die and puts up some competition even in the most modern benchmark! DirectX 11 calls are far more efficient than on the Ryzen platform, and Vulkan calls are almost on par. I simply can't believe it.
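To put numbers on "more efficient" and "almost on par", here is a small sketch that divides the system [1] score by the system [2] score for the three API rows of the table. The dictionary layout and variable names are just for illustration.

```python
# Scores copied from the 3DMark 2021 table: (system 1, system 2).
api_scores = {
    "DX11 Single Thread": (2652940, 1288261),
    "DX11 Multi Thread": (3480798, 2049429),
    "Vulkan": (32512467, 34316979),
}

# Ratio above 1.0 means the Xeon + 3090Ti box scored higher.
ratios = {name: xeon / ryzen for name, (xeon, ryzen) in api_scores.items()}

for name, ratio in ratios.items():
    print(f"{name}: system 1 / system 2 = {ratio:.2f}x")
```

Roughly 2.06x and 1.70x in favour of the Xeon box for DirectX 11, and about 0.95x for Vulkan. Keep in mind the two systems also use different GPUs and drivers, so this ratio is not a pure CPU comparison.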

▒ UNIGINE TROPICS [2008]

| TEST | 1 | 2 |
|---|---|---|
| FPS | 316 | 461 |
| MARK | 7971 | 11631 |

▒ UNIGINE HEAVEN [2009]

| TEST | 1 | 2 |
|---|---|---|
| FPS | 187 | 186 |
| SCORE | 4718 | 4694 |
| MIN FPS | 10 | 48 |
| MAX FPS | 317 | 403 |

We can observe how the old Xeon holds the powerful 3090Ti back: the average FPS is level with the 6900XT, but the minimum drops to 10 FPS.

▒ UNIGINE VALLEY [2013]

| TEST | 1 | 2 |
|---|---|---|
| FPS | 108 | 191 |
| SCORE | 4513 | 8000 |
| MIN FPS | 22 | 50 |
| MAX FPS | 173 | 310 |

Same thing as in the Heaven benchmark.

▒ UNIGINE SUPERPOSITION [2017]

| TEST | 1 | 2 |
|---|---|---|
| SCORE | 7829 | 15016 |

The benchmark definitely wants a better CPU to reveal the full potential of the 3090Ti.

▒ STALKER BENCHMARK [2009]

| FPS [Average/Maximum] | 1 | 2 |
|---|---|---|
| DAY | 200/434 | 252/635 |
| NIGHT | 236/567 | 259/600 |
| RAIN | 274/677 | 288/640 |
| SUN | 200/428 | 162/430 |

An old benchmark based on the X-Ray engine, which was used in S.T.A.L.K.E.R.: Call of Pripyat. It has no multi-threading support and is aimed at single-core CPUs. As we can see, the Xeon holds up pretty darn well here, mostly because of the old code, which prefers Intel-based instructions.

▒ AIDA64 [2022]

v6.70.6000

| SYSTEM | Xeon E5-2696v4 | 3090Ti [E5-2696v4] | 6900XT [5950X] |
|---|---|---|---|
| Memory Read | 68641 MB/s | 12556 MB/s | 26200 MB/s |
| Memory Write | 59403 MB/s | 11138 MB/s | 25753 MB/s |
| Memory Copy | 62931 MB/s | 852976 MB/s | 1118464 MB/s |
| Single-Precision FLOPS | 1830 GFLOPS | 41841 GFLOPS | 25415 GFLOPS |
| Double-Precision FLOPS | 915 GFLOPS | 656 GFLOPS | 1603 GFLOPS |
| 24-bit Integer IOPS | 458 GIOPS | 21355 GIOPS | 24208 GIOPS |
| 32-bit Integer IOPS | 458 GIOPS | 21355 GIOPS | 5073 GIOPS |
| 64-bit Integer IOPS | 123 GIOPS | 5023 GIOPS | 1316 GIOPS |
| AES-256 | 69967 MB/s | 114211 MB/s | 73809 MB/s |
| SHA-1 | 16728 MB/s | 246440 MB/s | 105498 MB/s |
| Single-Precision Julia | 492 FPS | 5266 FPS | 4883 FPS |
| Double-Precision Mandel | 262 FPS | 124 FPS | 557 FPS |
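The Memory Read row is where the PCIe 3.0 limit shows up most clearly. Below is a rough sketch comparing the measured numbers with theoretical peaks. It rests on a few assumptions that the post does not confirm: the Xeon runs its DDR4-2400 in quad-channel mode, the GPU Memory Read columns reflect transfers across the x16 PCIe link rather than local VRAM speed, and AIDA64's MB/s can be compared directly with decimal megabytes. The helper name `ddr_peak_mbs` is made up for this example.

```python
# Theoretical peak of a DDR memory setup in MB/s:
# channels x 8 bytes per transfer x transfer rate [MT/s].
def ddr_peak_mbs(channels: int, transfer_rate_mts: int, bus_bytes: int = 8) -> int:
    return channels * bus_bytes * transfer_rate_mts


# Theoretical x16 link peaks in MB/s: GT/s x 1000 x 16 lanes x 128/130 / 8.
PCIE3_X16_MBS = 8_000 * 16 * 128 / 130 / 8    # about 15754 MB/s
PCIE4_X16_MBS = 16_000 * 16 * 128 / 130 / 8   # about 31508 MB/s

# (label, measured Memory Read from the table, assumed theoretical peak)
measurements = [
    ("Xeon system memory", 68641, ddr_peak_mbs(4, 2400)),  # assumes quad-channel
    ("3090Ti over PCIe 3.0 x16", 12556, PCIE3_X16_MBS),
    ("6900XT over PCIe 4.0 x16", 26200, PCIE4_X16_MBS),
]

efficiency = {label: measured / peak for label, measured, peak in measurements}

for label, share in efficiency.items():
    print(f"{label}: {share:.0%} of theoretical peak")
```

If those assumptions hold, both cards sit at roughly 80% of what their link allows, and the 3090Ti simply has half the link to work with.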

█ FINAL THOUGHTS

High-end server technologies of 2016 have aged very gracefully. It's like a fine old wine.

The Xeon E5 [Broadwell] platform can handle any business workload in 2022 without issues. I've even tried Cyberpunk 2077.

The game runs just fine at native 2K resolution [no compromises like DLSS] with Psycho quality [better than Ultra], showing a stable 45-50 fps.

I’ll keep the system as my daily driver.

If everything goes well, somewhere around February 2023 I'll switch to the more recent Threadripper 3955WX on the WRX80 chipset.

That upgrade will unlock an octal-channel memory configuration and bring the desired PCIe 4.0 to remove the bottleneck in the GPU subsystem.

And after that, around 2024-2025, I'll upgrade to the top-of-the-line 64-core/128-thread Threadripper 5995WX.

128 threads will look quite nice in the Windows 7 Task Manager. Be ready for more stupid benchmarks!

That’s it for today. Stay safe!